DON'T BUY - Mine overheated with no chance of recovery. Bad thermal design.

Recovery Mode

Forgot Password

Missing Back button

Wifi - Hidden and MAC address

Screenshot button missing

These notes may apply to Android 11 phones in general, although they are based on my Nokia 8.3. This is because the Nokia 8.3 runs Android One, which is supposed to be an unaltered version of pure Android.

Recovery Mode

===============

To enter recovery mode:

- Plug in the USB-C cable

- Turn off the phone

- Press and hold the Volume Down AND Power buttons for a few seconds.

Once in Recovery mode, the options are:

- START

- Restart Bootloader

- Recovery Mode

- Power Off

- Boot to FFBM

- Boot to QMMI

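If USB debugging was already enabled and the phone is authorised for the computer, the button combination can probably be skipped. The following is a minimal Python sketch, assuming the `adb` tool from the Android platform-tools is installed and on the PATH. The helper names `reboot_command` and `reboot_phone` are made up for illustration. On many phones the `bootloader` target lands on a menu like the one listed above, but I have not confirmed this on the Nokia 8.3.

```python
import shutil
import subprocess

# Targets passed to `adb reboot`. "bootloader" usually opens the
# button-driven menu; "recovery" goes to the recovery screen directly.
REBOOT_TARGETS = ("bootloader", "recovery")


def reboot_command(target: str) -> list[str]:
    """Build the adb command line for a reboot target (hypothetical helper)."""
    if target not in REBOOT_TARGETS:
        raise ValueError(f"unsupported reboot target: {target!r}")
    return ["adb", "reboot", target]


def reboot_phone(target: str) -> None:
    """Run the command. Assumes adb is installed and the phone is authorised."""
    if shutil.which("adb") is None:
        raise RuntimeError("adb not found on PATH")
    subprocess.run(reboot_command(target), check=True)


if __name__ == "__main__":
    # Only prints the command; call reboot_phone("recovery") to actually run it.
    print(" ".join(reboot_command("recovery")))
```

This does not help if USB debugging was never turned on, in which case the button method is the only way in.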

Forgot Password

=================

This will WIPE ALL DATA

- Go to Recovery Mode as mentioned above.

- Select Recovery Mode. The Android logo is shown

- Unplug the cable

- Press the Power and Volume Up keys once

- The Recovery Mode menu will show many options

- Choose Wipe Data / Factory Reset

Missing Back button

====================

By default, Android 11 hides the 3 usual buttons, including the Back button.

To enable these 3 buttons on Nokia 8.3 Android 11:

- Go to Settings -> Accessibility -> System Navigation

- Choose between:

--- Gesture Navigation

--- 3 button Navigation (includes the Back, Home, Apps buttons)

Wifi - Hidden and MAC address

================================

Two important items when connecting the phone to a Wifi network are:

- using a Hidden Wifi network

- using the Device MAC address, NOT a Randomized MAC.

Unfortunately, modern Android phones deliberately make these two security features very hard to use by HIDING the relevant settings.

On a totally new Android phone, with no SIM card, adding a Wifi network also seems to be a challenge. I forgot the exact details, but persevere and click through all possible options to add the first wifi network.

Before configuring the phone, make sure to:

i) Temporarily change the network Wifi settings so that the network is NOT HIDDEN.

ii) Add the MAC address of the phone to the network's list of allowed devices (MAC filter).

Then, on the phone, start configuring:

- Go to Settings -> Network and Internet -> Wifi

- Click Add Network at the bottom.

- Type in the SSID, the Security option (e.g. WPA2) and the Password

- Click Advanced Options

--- In Hidden Network, change to 'Yes'

--- In Privacy, change to 'Use device MAC'

- Click Save and Connect.

Go back to the network Wifi settings and change them back to:

- Hidden Network enabled

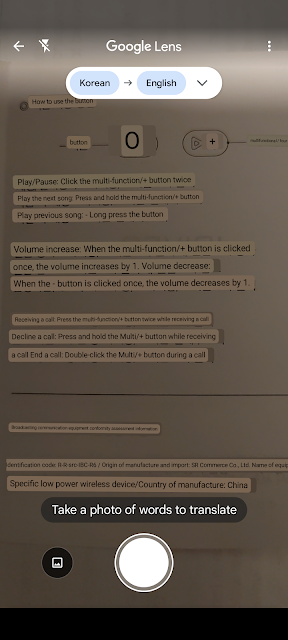
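One way to double-check which kind of MAC address the phone is presenting (for example, as seen in the router's client list) is to look at the first byte. Randomized MAC addresses are normally "locally administered", meaning bit 0x02 of the first byte is set, while a factory device MAC normally has that bit cleared. The following is a minimal Python sketch of that check. The function names and the sample addresses in the usage note are made up for illustration, and the check is a heuristic, not proof.

```python
def parse_mac(mac: str) -> bytes:
    """Parse 'aa:bb:cc:dd:ee:ff' (or '-' separated) into 6 raw bytes."""
    parts = mac.replace("-", ":").split(":")
    if len(parts) != 6:
        raise ValueError(f"not a MAC address: {mac!r}")
    # int(..., 16) rejects non-hex text; bytes() rejects values above 0xff.
    return bytes(int(part, 16) for part in parts)


def is_locally_administered(mac: str) -> bool:
    """True if bit 0x02 of the first byte is set (typical of a randomized MAC)."""
    return bool(parse_mac(mac)[0] & 0x02)
```

For instance, `is_locally_administered("da:a1:19:12:34:56")` is true (0xda has the bit set), so an address like that is likely randomized. An address starting with `00:` or `3c:` has the bit cleared and is more likely the real device MAC.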

Screenshot button missing

=============================

In previous Android versions or on other handsets, the screenshot feature is available in two places:

1. Swiping down from the top of the screen reveals settings like WiFi connection, GPS, HotSpot, Bluetooth, etc, AND Screenshot.

2. Pressing the Power and Volume Down buttons triggers a screenshot.

However, the above methods are no longer available on the Nokia 8.3 running Android 11. It may also be the same for other mobile brands using Android 11.

The Screenshot option, however, is still available in a new spot, whether using 3-button navigation or Gesture navigation. To trigger a Screenshot, simply go to the screen that shows the multiple background apps.

i) Using 3 button navigation, the Background Apps are available by pressing the Square icon button. Then the word Screenshot will appear at the bottom.

ii) Using Gesture navigation, the Background Apps are available by swiping upwards from the bottom of the screen. Then the word Screenshot will appear at the bottom.

I can see that this is a smart and intuitive location, even though it is very annoying if we are used to the previous methods. It is intuitive because the Screenshot is taken of the whole screen. What better location to put the Screenshot option than the Background Apps area, where we can scroll through the different Background Apps and pick the one to take a screenshot of?

NOTE: The word background does not mean the wallpaper background. It means the multiple apps that we have opened but that are hidden behind the most recent app. So these background apps are the ones still running but not on the front screen.
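As a side note, when the phone is connected to a computer with USB debugging enabled, a screenshot can also be pulled without touching the screen at all. The following is a minimal Python sketch, assuming `adb` from the Android platform-tools is installed and the phone is authorised. It uses the `adb exec-out screencap -p` command, which writes a PNG to standard output. The helper names are made up for illustration.

```python
import subprocess
from pathlib import Path

# Every PNG file starts with these 8 bytes.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def screencap_command() -> list[str]:
    """adb command that streams a PNG screenshot to stdout."""
    return ["adb", "exec-out", "screencap", "-p"]


def looks_like_png(data: bytes) -> bool:
    """Cheap sanity check so an adb error message is not saved as an image."""
    return data.startswith(PNG_SIGNATURE)


def save_screenshot(path: str) -> Path:
    """Capture the current screen and write it to `path` (needs a connected phone)."""
    result = subprocess.run(screencap_command(), capture_output=True, check=True)
    if not looks_like_png(result.stdout):
        raise RuntimeError("adb did not return PNG data")
    out = Path(path)
    out.write_bytes(result.stdout)
    return out
```

Calling `save_screenshot("screen.png")` would save whatever is currently on the phone screen into the current folder on the computer.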